SPEC SFS®2014_vda Result

Copyright © 2016-2021 Standard Performance Evaluation Corporation

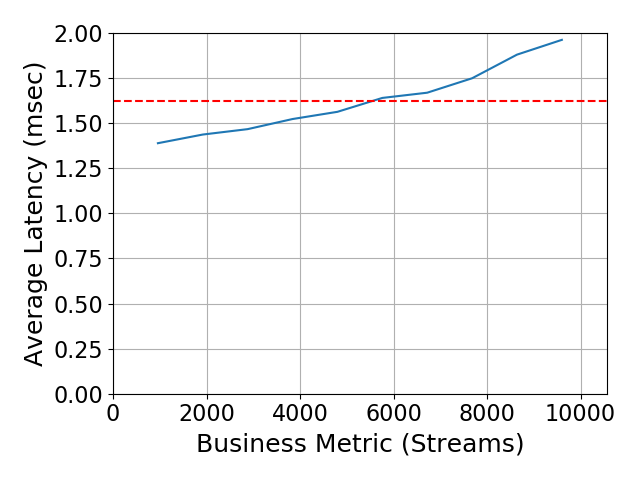

| CeresData Co., Ltd. | SPEC SFS2014_vda = 9600 Streams |
|---|---|
| CeresData Prodigy Distributed Storage System | Overall Response Time = 1.62 msec |


| CeresData Prodigy Distributed Storage System | |
|---|---|
| Tested by | CeresData Co., Ltd. |
| Hardware Available | March 2021 |
| Software Available | December 2020 |
| Date Tested | May 2021 |
| License Number | 6255 |
| Licensee Locations | Beijing, P.R. China |

The CeresData Prodigy System is an enterprise distributed storage system that delivers predictable high performance and scalability for both file and block storage. It supports a wide range of hardware architectures, including x86 and ARM. Its rich feature set and scalability make it suitable for a variety of enterprise applications, including traditional NAS/SAN, real-time workloads, and distributed databases.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | Distributed Storage OS | CeresData | CeresData Prodigy OS V9 | CeresData Prodigy OS is a feature-rich, standards-compliant, high-performance enterprise distributed storage system. |
| 2 | 1 | Storage server | CeresData | D-Fusion 5000 SOC | 10-node storage cluster. Each node has 2 Intel Xeon E5-2650 v4 (12-core CPU with hyperthreading), 256GiB memory (8 X 32GiB), 1 Mellanox ConnectX-3 56Gb/s InfiniBand HCA (1 port connected to the 100Gbps IB switch), 1 external dual-port 10GbE adapter (2 ports connected to the 10GbE switch for cluster-internal communication), and 1 4GB SATADOM holding Prodigy OS. |
| 3 | 11 | Storage Client Chassis | AIC | HA401-LB2 | Dual-node 4U chassis, 22 nodes in total: 20 nodes configured as clients, 1 node as the prime server, and 1 node unused. Each client node has 2 Intel Xeon E5-2620 v4 (8-core CPU with hyperthreading), 384GiB memory (12 X 32GiB), and 1 Mellanox ConnectX-4 100Gb/s InfiniBand HCA (1 port connected to the 100Gbps IB switch). |
| 4 | 15 | JBOD enclosure | CeresData | B702 | 2U, 24-bay, 12Gb/s SAS dual-expander JBOD enclosure holding most of the SSDs deployed to the storage cluster (360 of the 450). |
| 5 | 1 | 100Gbps IB Switch | Mellanox | SB7790 | 36-port 100Gbps InfiniBand switch. |
| 6 | 1 | 10GbE Switch | Maipu | MyPower S5820 | 48-port 10Gbps Ethernet switch. |
| 7 | 1 | 1GbE Switch | TP-LINK | TL-SF1048S | 48-port 1Gbps Ethernet switch. |
| 8 | 30 | SAS HBA | Broadcom | SAS9300-8e | SAS 3008 Fusion MPT2.5, 8-port 12Gb/s HBA. Each storage node had 3 external SAS9300-8e HBAs installed, and each HBA used 1 cable to connect to a JBOD enclosure. |
| 9 | 21 | 100G IB HCA | Mellanox | CX456A | ConnectX-4 EDR InfiniBand / 100GbE HCA. Each client had 1 IB HCA installed, and the prime node had 1 IB HCA installed. |
| 10 | 10 | 56G IB HCA | Mellanox | CX354A | ConnectX-3 FDR InfiniBand / 40GigE HCA. Each storage node had 1 IB HCA installed. |
| 11 | 10 | 10G NIC | Silicom | PE210G2SPI9-XR | 10GbE dual-port NIC. Each storage node had 1 external 10GbE dual-port NIC installed. |
| 12 | 450 | SSD | WDC | WUSTR6480ASS200 | 800GB WDC WUSTR6480ASS200 SSD. 360 were in the 15 JBOD enclosures; the remaining 90 were installed in the front bays of the storage nodes. |

| Item No | Component | Type | Name and Version | Description |
|---|---|---|---|---|
| 1 | Storage Node | Prodigy OS | Prodigy V9 | All 10 storage nodes were installed with the same Prodigy OS and configured as a single storage cluster. |
| 2 | Client | Operating System | RHEL 7.4 | All 20 client nodes and the prime node were installed with the same RHEL version, 7.4. |

| Parameter Name | Value | Description |
|---|---|---|
| None | None | None |

The hardware used the default configurations from CeresData, Mellanox, and the other component vendors.

| Client Parameter | Value | Description |
|---|---|---|
| /proc/sys/vm/min_free_kbytes | 10240000 | Set on the client machines. A shortage of reclaimable pages encountered by the InfiniBand driver caused page allocation failures and spurious stack traces in syslog. The value was increased to eliminate these errors. |
| proto | rdma | NFS mount option used by the clients to mount the storage directories over NFS/RDMA (NFSoRDMA). |
| wsize | 524288 | 524288 bytes (512KiB). NFS mount option used by the clients. |
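The client tunings above can be summarized in a short sketch. It only builds the text a client would apply and converts the `min_free_kbytes` reserve (expressed in KiB) into GiB for comparison with the 384GiB of client RAM. The server name `prodigy-server`, export path `/prodigyfs`, and mount point `/mnt/prodigy` are hypothetical placeholders not taken from this report; only `proto=rdma`, `wsize=524288`, and the `min_free_kbytes` value come from the table.

```python
# Reported client tunings; names of servers and paths below are hypothetical.
MIN_FREE_KBYTES = 10240000  # value written to /proc/sys/vm/min_free_kbytes (KiB)
NFS_OPTIONS = {"proto": "rdma", "wsize": 524288}  # reported NFS mount options
CLIENT_RAM_GIB = 384

def kib_to_gib(kib: int) -> float:
    """Convert a KiB count (the unit of min_free_kbytes) to GiB."""
    return kib / (1024 * 1024)

def mount_options(opts: dict) -> str:
    """Join options into the comma-separated form accepted by `mount -o`."""
    return ",".join(f"{key}={value}" for key, value in opts.items())

def mount_command(server: str, export: str, mountpoint: str) -> str:
    """Build an illustrative NFS mount command line (it is not executed)."""
    return f"mount -t nfs -o {mount_options(NFS_OPTIONS)} {server}:{export} {mountpoint}"

if __name__ == "__main__":
    reserve = kib_to_gib(MIN_FREE_KBYTES)
    print(f"echo {MIN_FREE_KBYTES} > /proc/sys/vm/min_free_kbytes")
    print(f"reserve = {reserve:.2f} GiB ({reserve / CLIENT_RAM_GIB:.1%} of client RAM)")
    # Hypothetical server, export path, and mount point.
    print(mount_command("prodigy-server", "/prodigyfs", "/mnt/prodigy"))
```

The reserve works out to roughly 9.8GiB per client, a small fraction of each client's 384GiB.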

Specified as above.

None.

| Item No | Description | Data Protection | Stable Storage | Qty |
|---|---|---|---|---|
| 1 | 800GB WDC WUSTR6480ASS200 SSD. 360 were in the 15 JBOD enclosures; the remaining 90 were installed in the front bays of the storage nodes. | 8+1 | yes | 450 |

| Number of Filesystems | Total Capacity | Filesystem Type |
|---|---|---|
| 1 | 285 TiB | ProdigyFS |

A single Prodigy distributed file system was created over the 450 SSD drives across the 10 storage nodes. Any data in the file system could be accessed from any node of the storage cluster. The disk protection scheme was configured as 8+1.
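A quick capacity check relates the drive count, the 8+1 scheme, and the reported 285 TiB. This sketch assumes the drive vendor's decimal convention (1 GB = 10^9 bytes) and models only parity overhead, not file system metadata or any reserved space, so its result is an upper bound rather than a reconstruction of how the 285 TiB figure was derived.

```python
# Capacity arithmetic for the reported configuration.
# Assumption: 800GB uses the vendor's decimal convention (1 GB = 10**9 bytes).
DRIVES = 450
DRIVE_BYTES = 800 * 10**9
GROUP_WIDTH = 9      # disks per protection group (8 data + 1 parity)
DATA_PER_GROUP = 8
TIB = 2**40

def capacity_summary():
    """Return (groups, leftover drives, raw TiB, TiB remaining after parity)."""
    groups, leftover = divmod(DRIVES, GROUP_WIDTH)
    raw_tib = DRIVES * DRIVE_BYTES / TIB
    after_parity_tib = raw_tib * DATA_PER_GROUP / GROUP_WIDTH
    return groups, leftover, raw_tib, after_parity_tib

if __name__ == "__main__":
    groups, leftover, raw, usable = capacity_summary()
    print(f"{groups} groups of {GROUP_WIDTH} disks, {leftover} drives left over")
    print(f"raw: {raw:.1f} TiB, after 8+1 parity: {usable:.1f} TiB (reported: 285 TiB)")
```

The 450 drives divide evenly into 50 groups; roughly 327 TiB raw leaves about 291 TiB after parity, slightly above the reported 285 TiB.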

The SSDs were organized into groups of 9 disks (50 groups in total), each group tolerating 1 disk failure. Data and parity were striped across all disks within a group, and the single Prodigy distributed file system was created over all of these groups.
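To illustrate how a 9-disk group can survive the loss of any single disk, the sketch below uses plain XOR parity over 8 data chunks. This is an assumption for illustration only: the report does not say which parity code Prodigy uses, and XOR single parity is simply the most common code with one-failure tolerance. The helper names `make_stripe` and `rebuild` are hypothetical.

```python
from functools import reduce

DATA_CHUNKS = 8  # 8 data chunks + 1 parity chunk per stripe (one per disk)

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))

def make_stripe(data: bytes, chunk_size: int) -> list:
    """Split data into 8 zero-padded chunks and append one XOR parity chunk."""
    capacity = DATA_CHUNKS * chunk_size
    if len(data) > capacity:
        raise ValueError("data does not fit in one stripe")
    padded = data.ljust(capacity, b"\0")
    chunks = [padded[i * chunk_size:(i + 1) * chunk_size] for i in range(DATA_CHUNKS)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]

def rebuild(stripe: list, lost: int) -> bytes:
    """Recover the chunk at index `lost` by XOR-ing the 8 surviving chunks."""
    survivors = [chunk for i, chunk in enumerate(stripe) if i != lost]
    return reduce(xor_bytes, survivors)

if __name__ == "__main__":
    stripe = make_stripe(b"SPEC SFS2014 vda stream data", chunk_size=4)
    original = stripe[3]
    stripe[3] = None  # simulate one failed disk in the 9-disk group
    assert rebuild(stripe, 3) == original
    print("chunk 3 rebuilt:", rebuild(stripe, 3))
```

Because the XOR of all 9 chunks is zero, the XOR of any 8 survivors equals the missing chunk, whether it held data or parity.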

| Item No | Transport Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 56Gbps IB | 10 | The solution used a total of 10 56Gbps IB ports from the storage nodes (1 port per node) to the IB switch. |
| 2 | 100Gbps IB | 21 | The solution used a total of 21 100Gbps IB ports from the clients (1 port from each of the 20 clients and 1 port from the prime node) to the IB switch. |

Each storage node has a dual-port 56Gbps IB HCA, and each client has a dual-port 100Gbps IB HCA; only one port per HCA was used during the test.

| Item No | Switch Name | Switch Type | Total Port Count | Used Port Count | Notes |
|---|---|---|---|---|---|
| 1 | Mellanox SB7790 | 100Gbps IB | 36 | 31 | 21 100G IB cables from the clients and prime node, 10 56G IB cables from the storage cluster nodes. |
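The port counts in the network and switch tables can be cross-checked with a small tally. This sketch uses nominal link rates only (56Gb/s FDR, 100Gb/s EDR) and ignores line encoding and protocol overhead, so the aggregate figures are upper bounds on raw link capacity, not measured throughput.

```python
# Port and nominal link-rate tally for the single Mellanox SB7790 switch.
SWITCH_PORTS = 36
LINKS = {
    # group: (ports used, nominal Gb/s per port)
    "storage nodes (56Gb/s FDR)": (10, 56),
    "clients (100Gb/s EDR)": (20, 100),
    "prime (100Gb/s EDR)": (1, 100),
}

def tally(links: dict):
    """Return total ports used and the nominal aggregate Gb/s per group."""
    used = sum(ports for ports, _ in links.values())
    nominal = {name: ports * rate for name, (ports, rate) in links.items()}
    return used, nominal

if __name__ == "__main__":
    used, nominal = tally(LINKS)
    print(f"ports used: {used} of {SWITCH_PORTS} ({SWITCH_PORTS - used} free)")
    for name, gbps in nominal.items():
        print(f"{name}: {gbps} Gb/s nominal aggregate")
```

The tally reproduces the 31 used ports and shows the storage side's nominal aggregate (560Gb/s) is well below the clients' (2000Gb/s).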

| Item No | Qty | Type | Location | Description | Processing Function |
|---|---|---|---|---|---|
| 1 | 20 | CPU | Prodigy Storage | Intel(R) Xeon(R) E5-2650 v4 @ 2.20GHz, 12-core CPU with hyperthreading | NFS (over RDMA) Server |
| 2 | 40 | CPU | Load Generator, Client | Intel(R) Xeon(R) E5-2620 v4 @ 2.10GHz, 8-core CPU with hyperthreading | NFS (over RDMA) Client |
| 3 | 2 | CPU | Prime | Intel(R) Xeon(R) E5-2620 v4 @ 2.10GHz, 8-core CPU with hyperthreading | SPEC SFS2014 Prime |

Each node had 2 CPUs.

| Description | Size in GiB | Number of Instances | Nonvolatile | Total GiB |
|---|---|---|---|---|
| Prodigy storage node memory | 256 | 10 | V | 2560 |
| Client memory | 384 | 20 | V | 7680 |
| Prime memory | 384 | 1 | V | 384 |
| Grand Total Memory Gibibytes | | | | 10624 |

Each storage node had 8 X 32GiB of RAM installed; each client and the prime node had 12 X 32GiB of RAM installed.
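The CPU and memory tables can be cross-checked against the reported grand total with a per-role inventory. The node counts, CPUs per node, cores per CPU, and GiB per node all come from the tables above; the assumption of 2 hardware threads per core is the usual Intel hyperthreading configuration and is labeled as such in the code.

```python
# Per-role inventory taken from the CPU and memory tables above.
ROLES = {
    # role: (node count, CPUs per node, cores per CPU, GiB RAM per node)
    "storage": (10, 2, 12, 256),
    "client": (20, 2, 8, 384),
    "prime": (1, 2, 8, 384),
}
THREADS_PER_CORE = 2  # assumption: standard Intel hyperthreading

def totals(roles: dict):
    """Return (CPU sockets, physical cores, GiB per role, total GiB)."""
    cpus = sum(n * sockets for n, sockets, _, _ in roles.values())
    cores = sum(n * sockets * per_cpu for n, sockets, per_cpu, _ in roles.values())
    mem = {role: n * gib for role, (n, _, _, gib) in roles.items()}
    return cpus, cores, mem, sum(mem.values())

if __name__ == "__main__":
    cpus, cores, mem, total_gib = totals(ROLES)
    print(f"CPUs: {cpus}, cores: {cores}, threads: {cores * THREADS_PER_CORE}")
    for role, gib in mem.items():
        print(f"{role}: {gib} GiB")
    print(f"grand total: {total_gib} GiB (reported: 10624)")
```

The per-role products (2560, 7680, and 384GiB) sum to the reported 10624GiB, and the socket count matches the 20 + 40 + 2 CPUs listed.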

The Prodigy System stores all data writes on disk: once a write is acknowledged, it has already been committed to disk. In addition, the disks under test were configured with "8+1" data protection.
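The stable-storage rule described above (acknowledge a write only after it has been committed to disk) can be illustrated with a generic POSIX pattern. This is a minimal sketch using Python's `os.fsync`; it is not Prodigy's implementation, which this report does not describe, and the function name `durable_write` and file name `stream.bin` are hypothetical.

```python
import os
import tempfile

def durable_write(path: str, data: bytes) -> int:
    """Write data and return only after fsync reports it is on stable storage.

    Returning the byte count stands in for the acknowledgement: no caller sees
    success before the data has been flushed to the device.
    """
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        written = 0
        while written < len(data):  # os.write may perform a partial write
            written += os.write(fd, data[written:])
        os.fsync(fd)  # block until the kernel reports the data is durable
    finally:
        os.close(fd)
    return written

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "stream.bin")
        acked = durable_write(target, b"x" * 4096)
        print(f"acknowledged {acked} bytes")
```

Placing the acknowledgement after `fsync` rather than after `write` is what distinguishes a committed write from one that may still sit only in volatile page cache.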

Each of the 10 storage nodes was installed with the standard Prodigy OS, and the nodes were then configured into a single storage cluster. None of the components used to perform the test were patched against Spectre or Meltdown (CVE-2017-5754, CVE-2017-5753, CVE-2017-5715).

None.

All 20 clients and 10 storage nodes were connected via a single IB switch. Through this switch, any client could directly access any data in the file system via any node in the storage cluster.

None.

None.

Generated on Tue Jun 22 18:49:55 2021 by SpecReport
